Beyond the Hype: My Honest Journey Into the "All-in-One" Promise of MakeShot AI

We have all been there. It is 2:00 AM, your coffee is cold, and you are staring at a blank screen. You have a brilliant concept for a brand video or a visual narrative locked in your mind—the specific lighting, the camera movement, the emotional texture. But the gap between your imagination and the screen feels like a canyon.

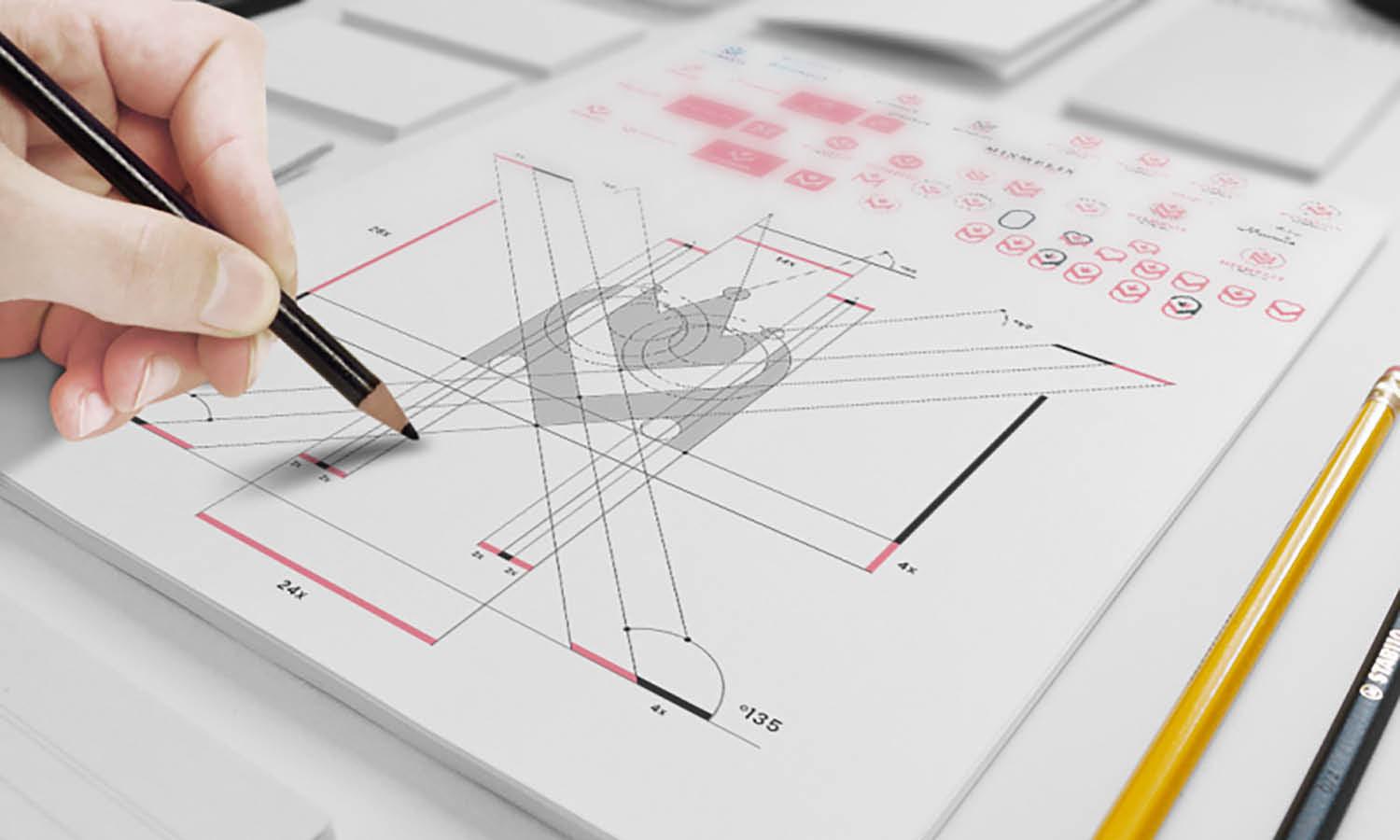

For years, bridging that gap meant navigating a labyrinth of expensive software. You had to be a writer, a director, and a VFX artist all at once. Even with the recent explosion of AI tools, the workflow has felt fragmented and exhausting. You might use one tool for static images, another for animation, a third for upscaling, and a fourth to edit it all together. It was less "creative flow" and more "file management fatigue."

This is where I stumbled upon MakeShot. I didn't want another "magic button" that promised the world and delivered glitchy, morphing nightmares. I was looking for a cohesive studio environment. After spending the last two weeks deep-diving into its ecosystem, I have realized that while it isn't perfect, it represents a significant shift in how we approach digital storytelling.

What is MakeShot.ai, Really?

To understand how it works, we need to look past the marketing jargon. At its core, MakeShot acts less like a single generator and more like an aggregator or a high-end command center.

Instead of locking you into a single proprietary algorithm that might be good at anime but terrible at photorealism, the platform positions itself as a unified interface to some of the most advanced models available, naming powerhouses like Veo 3 and Sora 2. Think of it less like hiring a single artist and more like stepping into the role of an art director who has a team of specialists ready to execute your vision. You provide the direction; the platform hands it to the model best suited to build it.

The "Swiss Army Knife" Approach

In my testing, the immediate appeal was the consolidation. Usually, I have five tabs open: one for Midjourney, one for Runway, one for a script writer, and so on. MakeShot attempts to bring the text-to-video, image-to-video, and image generation workflows under one roof. It simplifies the technical stack, allowing you to focus on the story rather than the *software*.

My Hands-On Experience: The Good, The Bad, and The Surprising

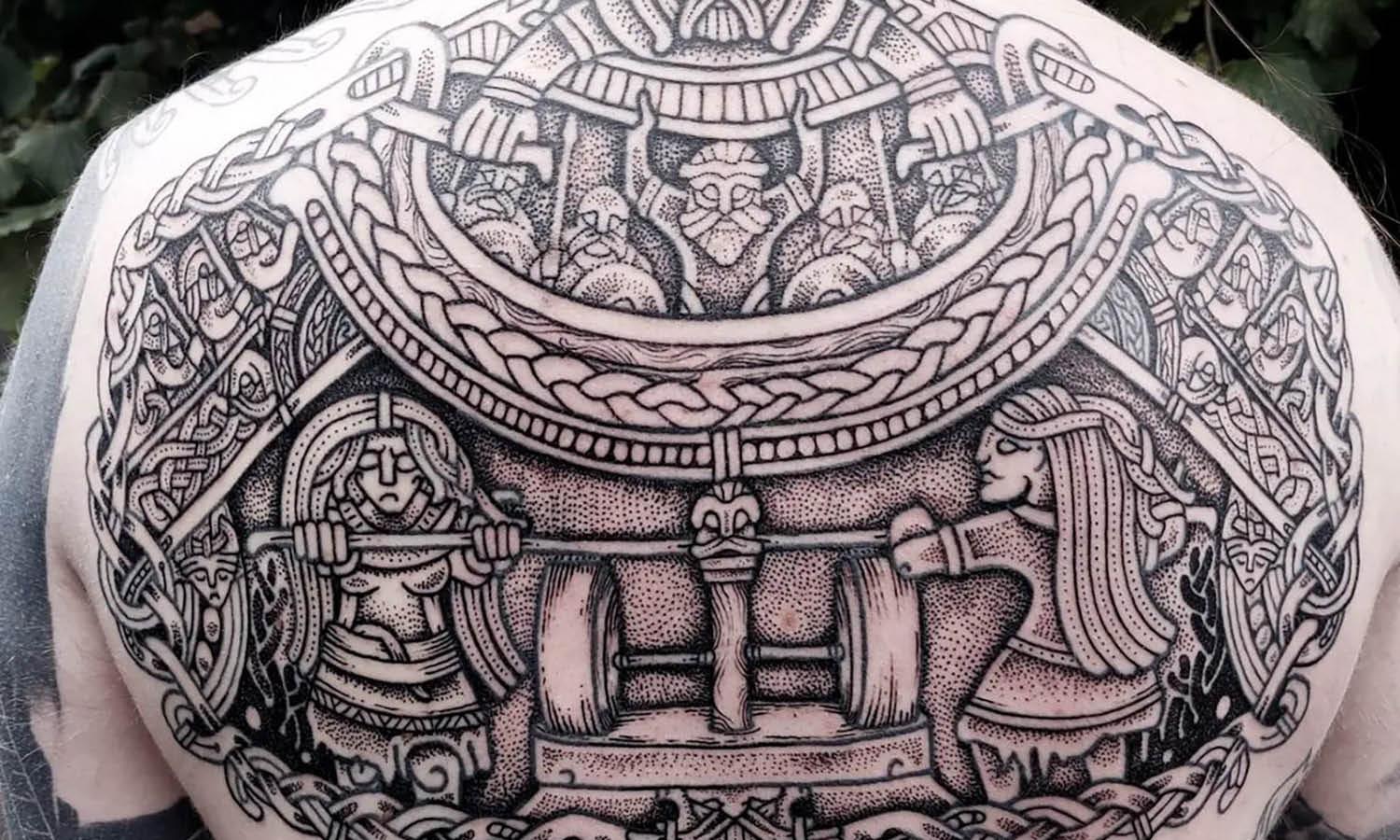

I decided to put the platform to the test with a specific, challenging project: a 15-second teaser for a fictional sci-fi noir series. This genre is notoriously difficult for AI because it requires balancing high-contrast lighting (chiaroscuro) with complex environmental physics like rain and fog.

The Prompting Experience

The interface is clean, almost deceptively simple. I typed in:

"Cinematic tracking shot, rain-slicked neon streets of Tokyo, 2050, a detective in a trench coat walking away from camera, heavy atmosphere, reflection in puddles, 8k resolution, highly detailed."
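
If you plan to iterate on a prompt like this, it can help to treat it as a set of labeled parts rather than one long sentence. The sketch below is my own illustration, not anything MakeShot provides: `ShotPrompt` and its field names are hypothetical, and the grouping (camera, setting, subject, mood, quality) is simply one way to slice the prompt above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ShotPrompt:
    """Hypothetical helper: the parts of a text-to-video prompt, in order."""
    camera: str    # shot type and camera movement
    setting: str   # place and time
    subject: str   # who or what is on screen, and what they are doing
    mood: List[str] = field(default_factory=list)     # atmosphere and lighting cues
    quality: List[str] = field(default_factory=list)  # resolution and detail tags

    def render(self) -> str:
        """Join the non-empty parts into one comma-separated prompt string."""
        parts = [self.camera, self.setting, self.subject, *self.mood, *self.quality]
        return ", ".join(p.strip() for p in parts if p.strip())


detective = ShotPrompt(
    camera="Cinematic tracking shot",
    setting="rain-slicked neon streets of Tokyo, 2050",
    subject="a detective in a trench coat walking away from camera",
    mood=["heavy atmosphere", "reflection in puddles"],
    quality=["8k resolution", "highly detailed"],
)
print(detective.render())
```

Keeping the parts separate makes it easy to swap one element, say the mood cues, while holding the rest constant between attempts.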

Observation on Physics and Lighting

In many AI tools I have used previously, "rain" often looks like static noise overlaid on the screen. In my generated clip using MakeShot’s high-end model setting, the water physics were surprisingly grounded.

The reflection in the puddles didn't just mirror the image; it distorted naturally with the ripples caused by the falling rain. It wasn't 100% photorealistic—if you squinted, the texture of the trench coat looked a bit too smooth, lacking the chaotic imperfections of real fabric—but the lighting interaction was leaps and bounds ahead of what I was using just six months ago. The neon signs cast a diffuse glow that wrapped around the character correctly, suggesting the model understands 3D spatial relationships, not just 2D image synthesis.

Motion Consistency and Stability

This is usually the dealbreaker. We have all seen AI videos where a person's hand turns into spaghetti or a face morphs into a different person mid-turn.

In my tests, the stability was notable. The character's walk cycle felt weighted; there was a sense of mass to the movement. It wasn't completely free of artifacts—there was a slight, shimmering "heat haze" effect in the background buildings that shouldn't have been there—but the subject remained coherent throughout the clip. This consistency is critical if you plan to use these clips for anything beyond a quick social media post.

Comparative Analysis: MakeShot vs. The Field

To truly understand where this tool fits in your toolkit, we need to look at it alongside the traditional workflow and other generic AI generators.

Feature & Workflow Comparison

| Feature | Traditional CGI/Editing | Generic Single-Model AI | MakeShot.ai Experience |
| --- | --- | --- | --- |
| Learning Curve | Steep (Years to master Blender/AE) | Low (Minutes) | Moderate (Requires prompt engineering skill) |
| Workflow | Fragmented (Multiple apps required) | Isolated (One specific look only) | Unified (Images & Video in one flow) |
| Render Time | Hours to Days | Minutes | Minutes (Varies by model load) |
| Control | Absolute Pixel Control | "Slot Machine" Randomness | Directed (Better adherence to complex prompts) |
| Cost Efficiency | High ($$$$ + Labor) | Low ($) | Medium (Subscription based on model tier) |
| Motion Quality | Perfect (Manual) | Often "Jelly-like" | Stabilized (Subject consistency is prioritized) |

The Reality Check: Limitations You Should Know

I believe in managing expectations. If you go into MakeShot expecting it to replace a Christopher Nolan film crew tomorrow, you will be disappointed. It is powerful, but it is not magic.

The "Gacha" Element

Despite the advanced models, AI generation still has an element of randomness. I had to run my "detective" prompt three times to get a usable shot.

- Attempt 1: The lighting was perfect, but the character had three arms for a split second.

- Attempt 2: The motion was great, but the city looked too cartoonish.

- Attempt 3: The "Goldilocks" shot—moody, stable, and usable.

You are paying for the capacity to create, but you still need patience to curate. It is not always "one click, one masterpiece."

Text Rendering

Although it is improving, legible in-video text (such as a neon sign that specifically reads "HOTEL") is still hit-or-miss. In my test, the sign came out as "HOTLE" or as alien hieroglyphics. It is often better to use the AI for the visuals and add specific text in post-production than to rely on the AI to render typography perfectly within the scene.
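
As a rough idea of what "add it in post" can look like without opening an editor, here is a minimal sketch that builds an ffmpeg command using its `drawtext` filter. The file names and coordinates are placeholders I made up, and the helper `drawtext_command` is hypothetical. It also assumes an ffmpeg build with `drawtext` enabled (on some systems you would need to add a `fontfile=` option), and it only places text at a fixed position, so a sign that moves through a tracking shot would still need proper motion tracking.

```python
import shlex
from typing import List


def drawtext_command(src: str, dst: str, text: str, x: int, y: int,
                     fontsize: int = 48, fontcolor: str = "white") -> List[str]:
    """Build an ffmpeg argv that burns `text` into the video at pixel (x, y)."""
    # Keep the sketch simple: letters, digits, and spaces need no filter escaping.
    if not text or not all(ch.isalnum() or ch == " " for ch in text):
        raise ValueError("this sketch only supports letters, digits, and spaces")
    vf = f"drawtext=text='{text}':x={x}:y={y}:fontsize={fontsize}:fontcolor={fontcolor}"
    # "-c:a copy" leaves any audio track untouched; only the video is re-encoded.
    return ["ffmpeg", "-i", src, "-vf", vf, "-c:a", "copy", dst]


# Placeholder file names and coordinates, for illustration only.
cmd = drawtext_command("detective_shot.mp4", "detective_titled.mp4", "HOTEL", x=120, y=80)
print(shlex.join(cmd))  # a shell-quoted command you can inspect before running
```

The function only builds the command, so you can check it before handing it to your shell or to `subprocess.run`.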

Who Should Actually Use This?

For Marketers and Agencies

If you need to create mood boards, animatics, or social media content that stops the scroll, this is a powerhouse. The ability to rapidly iterate on visual concepts without waiting for a render farm is a game-changer. You can test ten different visual styles for a client in the time it used to take to mock up one.

For Indie Filmmakers

It serves as an incredible tool for "pre-visualization." You can show your cinematographer exactly what you mean by "dystopian sunset" without drawing a single storyboard. It bridges the communication gap between your brain and your crew.

Final Verdict: A Tool, Not a Toy

MakeShot feels like a glimpse into the near future of content creation. It strips away the technical barriers of video production, allowing us to focus on the narrative.

Is it flawless? No. It is a sophisticated engine that requires a skilled driver. But when you get the prompt right, and the model clicks, the result is something that would have cost thousands of dollars and weeks of time to produce just a few years ago.

If you are willing to embrace the iterative process and want to consolidate your AI workflow, it is well worth the exploration. The landscape of AI is moving fast—don't just watch from the sidelines. Jump in, test the limits, and see if you can break the creative deadlock.