5 Data-Driven AI Video Generation Trends Defining 2025

Article Summary: This article analyzes 5 key trends in AI video generation for 2025. It covers the shift to consistency, the importance of multi-model ecosystems, and the rise of "AI Directing," offering data-driven insights and practical tips for marketers and creators.

The novelty phase of generative AI is over. We have moved past the era of "look what this robot made" to a more mature, demanding landscape where utility and ROI are king. As we navigate 2025, AI video generation trends are being shaped not just by what the technology can do, but by what professionals need it to do. Market forecasts point to a surge in adoption, with some projecting the global AI video market to grow at a compound annual growth rate (CAGR) above 35%.

For marketers, filmmakers, and digital strategists, understanding these shifts is crucial. It is no longer about simply generating video; it is about integrating AI into complex, high-stakes workflows. Platforms like Genmi AI are at the forefront of this shift, providing the infrastructure for the next wave of digital storytelling.

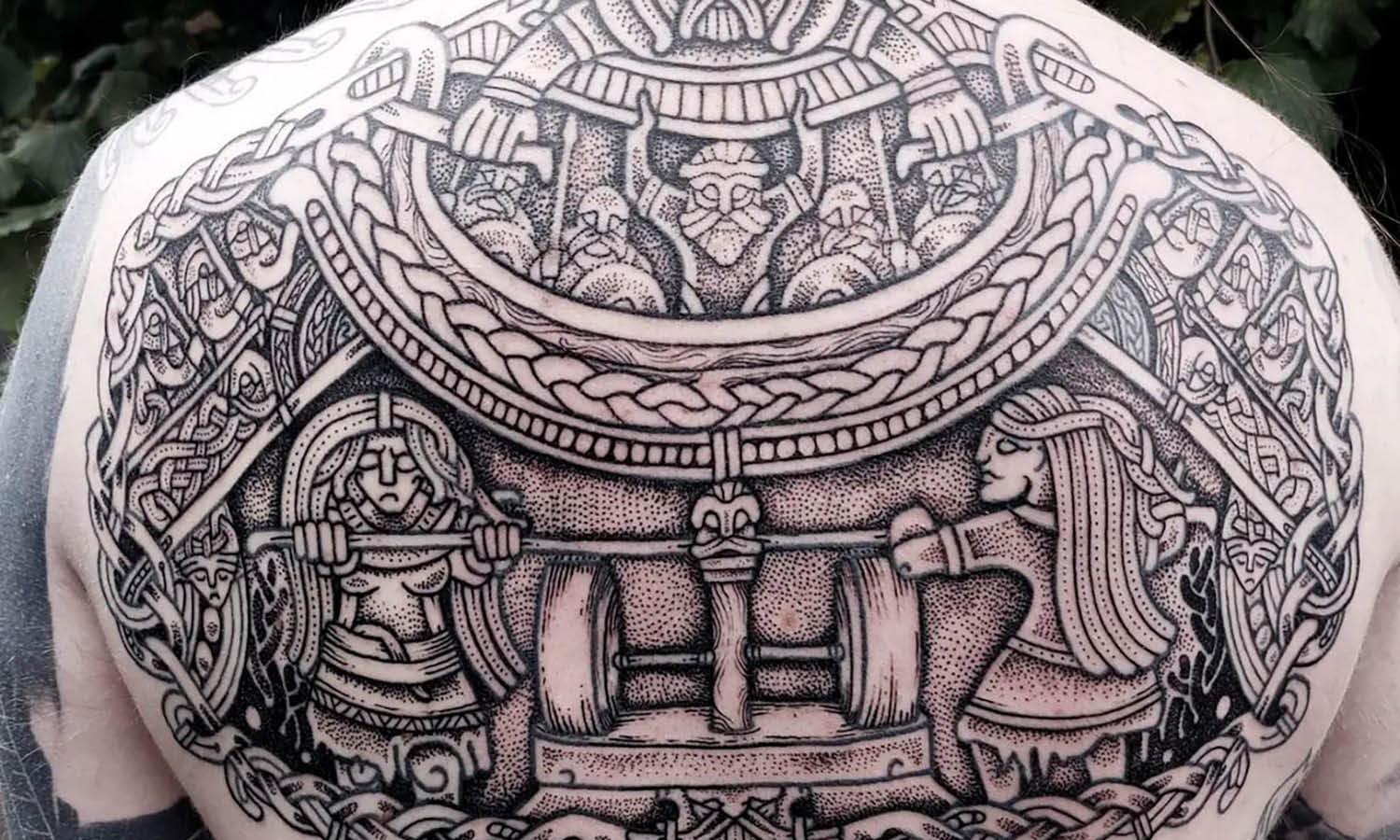

1. The Shift to "Controllable Consistency"

In 2024, the biggest complaint about AI video was the "morphing" effect: characters changing faces or clothes between frames. The dominant trend for 2025 is consistency.

Newer models prioritize temporal coherence: a character generated in frame one should still look the same in frame 100. Much of this progress comes from the "Image-to-Video" workflow, where a static reference image acts as an anchor for the character's appearance. This lets brands use AI for serialized content and storytelling rather than just abstract, one-off clips.

✨ Key Tips

- For brand assets, start from a high-resolution "seed" (reference) image rather than a text prompt alone.

- Where your tool supports negative prompts, use them to explicitly steer the model away from "morphing" or "blurring."
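To make the two tips concrete, here is a minimal sketch of how a team might template its image-to-video requests so every shot reuses the same anchor image and the same list of negative terms. The `ImageToVideoRequest` shape, its field names, and the `build_shot` helper are hypothetical illustrations, not the API of Genmi AI or any other real platform.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class ImageToVideoRequest:
    """Hypothetical request shape; not the API of any real platform."""
    reference_image: str      # path/URL of the high-resolution anchor image
    prompt: str               # describes motion and action, not identity
    negative_prompt: str = "" # terms the model should steer away from

# Hypothetical house defaults reused on every shot in a series
DEFAULT_NEGATIVES = ["morphing", "blurring", "face changes", "outfit changes"]

def build_shot(reference_image: str, action: str,
               extra_negatives: Optional[List[str]] = None) -> ImageToVideoRequest:
    """Pair one anchor image with a per-shot action and the shared negatives."""
    negatives = DEFAULT_NEGATIVES + (extra_negatives or [])
    return ImageToVideoRequest(
        reference_image=reference_image,
        prompt=action,
        negative_prompt=", ".join(negatives),
    )

# Every shot in the series points at the same anchor image
shots = [
    build_shot("brand_mascot_4k.png", "waves at the camera"),
    build_shot("brand_mascot_4k.png", "walks through a park", ["motion blur"]),
]
```

Keeping the anchor image and negatives in one place means a series cannot silently drift because someone forgot to attach the reference on a later shot.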

2. The Rise of the "Model Hub" Ecosystem

One size rarely fits all in creative production. A major trend is the move away from single-model dependency. Creators are realizing that Model A might be excellent for anime-style animation, while Model B is superior for photorealistic drone shots.

Instead of subscribing to five different services, users are flocking to aggregators that offer access to multiple top-tier models (like Sora, Veo, or Seedance) within a single interface. This "Hub" approach adds flexibility and makes it easier to match the tool to the specific aesthetic you need. You can see this in action by exploring engines such as the Sora models.
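In practice, a hub workflow often comes down to a routing decision: which engine should render which job. The sketch below assumes a team keeps its own table mapping aesthetics to engines; `model_a` and `model_b` are placeholder names mirroring the Model A / Model B example above, and the style labels and fallback choice are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical routing table a team maintains from its own testing.
# "model_a" / "model_b" are placeholders, not real product names.
ROUTES: Dict[str, str] = {
    "anime": "model_a",
    "photoreal_aerial": "model_b",
}
FALLBACK = "model_b"  # assumed default for styles nobody has mapped yet

def pick_model(aesthetic: str) -> str:
    """Return the engine assigned to an aesthetic, or the fallback."""
    return ROUTES.get(aesthetic, FALLBACK)

def route_jobs(jobs: List[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Group (job_id, aesthetic) pairs by the engine that should render them."""
    batches: Dict[str, List[str]] = {}
    for job_id, aesthetic in jobs:
        batches.setdefault(pick_model(aesthetic), []).append(job_id)
    return batches

batches = route_jobs([("j1", "anime"), ("j2", "photoreal_aerial"), ("j3", "claymation")])
```

Here `batches` groups `j1` under `model_a`, and `j2` plus the unmapped `j3` under `model_b`. Keeping the table as data rather than code makes it easy to update as a newer model overtakes an older one for a given style.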

3. Hyper-Personalization at Scale

Data-driven marketing is colliding with generative video, and AI video generation is increasingly powering hyper-personalized advertising. Imagine an e-commerce video that doesn't just show a generic shoe, but dynamically renders the shoe in the exact color the user was just browsing.

This isn't sci-fi; it's the current trajectory. AI enables the creation of thousands of video variations from a single core asset, tweaking text overlays, voiceovers, and visual elements to match specific audience segments.

Practical Techniques

- Modular Scripting: Write scripts with interchangeable "slots" (e.g., [City Name], [Product Benefit]) that AI can swap out.

- Asset Libraries: Build a library of background plates and overlay elements that AI can mix and match.
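The modular-scripting idea can be sketched in a few lines: define a script with slots, list the values for each audience segment, and expand every combination. Python's `string.Template` uses `$name` placeholders, which stand in here for the article's `[City Name]` and `[Product Benefit]` brackets; the script text and slot values are made up for illustration. Each filled-in script would then feed a separate generation or voiceover job, which is not shown.

```python
from itertools import product
from string import Template

# Script with interchangeable slots ($city, $benefit are illustrative names)
SCRIPT = Template("Running in $city? Our new shoe delivers $benefit.")

# Hypothetical slot values, one list per audience dimension
SLOTS = {
    "city": ["Austin", "Berlin"],
    "benefit": ["all-day comfort", "faster recovery"],
}

def expand_variants(template, slots):
    """Yield (slot_values, filled_script) for every combination of slot values."""
    keys = list(slots)
    for values in product(*(slots[k] for k in keys)):
        mapping = dict(zip(keys, values))
        yield mapping, template.substitute(mapping)

variants = list(expand_variants(SCRIPT, SLOTS))
for mapping, text in variants:
    print(mapping["city"], "|", text)
```

Two cities and two benefits give four variants; the count multiplies with every slot added, which is how a single core asset turns into thousands of versions. `Template.substitute` raises a `KeyError` if a slot is left unfilled, a useful guard against shipping an ad with a blank placeholder.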

4. From "Prompting" to "Directing"

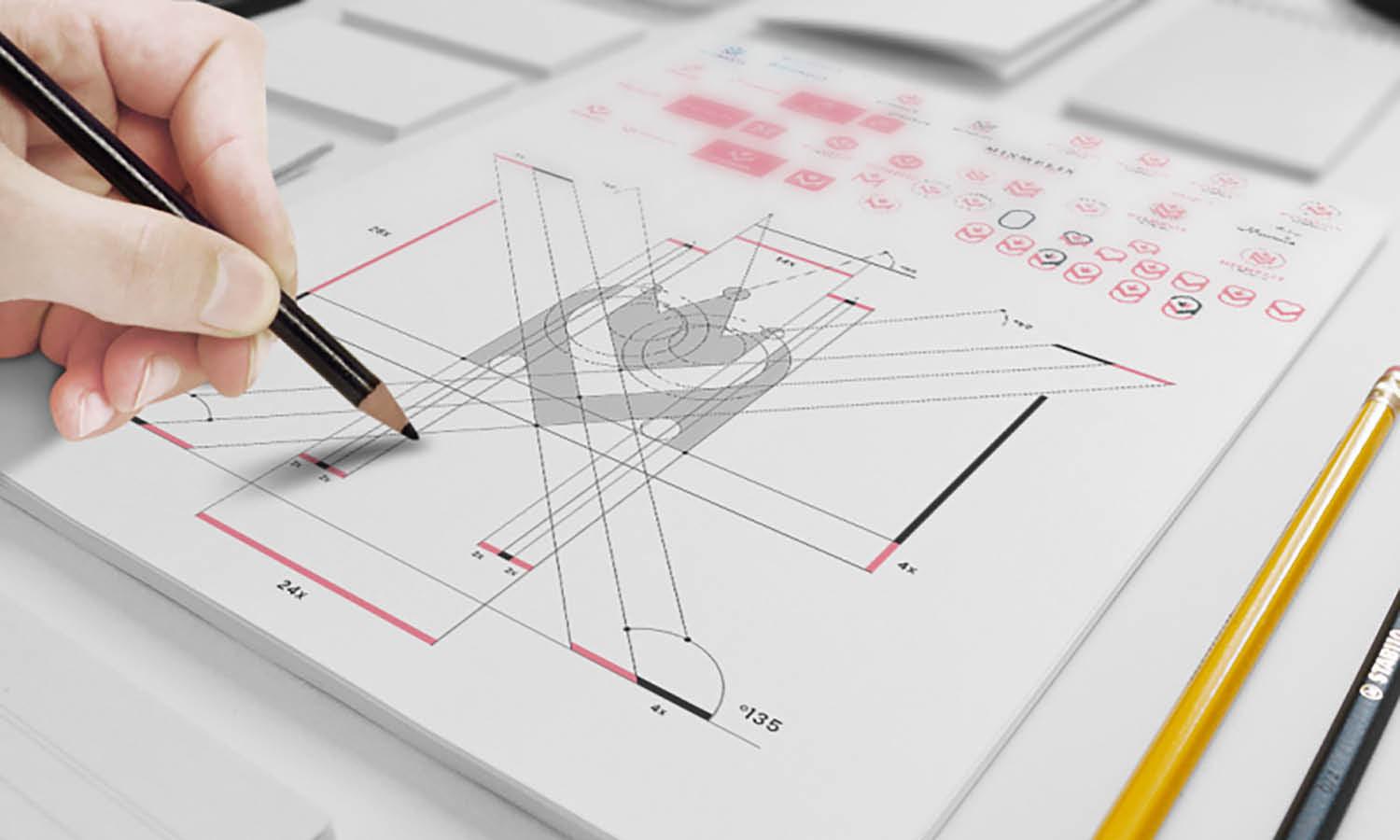

The skillset of the creator is evolving. "Prompt engineering" is becoming "AI Directing." It is no longer enough to type "a cat on a bike." The trend is moving toward granular control over camera angles, lighting physics, and motion paths.

Many tools are adding controls for "Camera Pan," "Zoom Speed," and "Focus Pull." These let creators use cinematic language to guide the AI, producing outputs that feel less like computer generations and more like intentional filmmaking.

Best Practices

- Use cinematic terminology in your prompts (e.g., "Dutch angle," "Bokeh effect," "Dolly zoom").

- Treat the AI as a junior cinematographer; give it specific instructions on lighting (e.g., "Golden hour," "Volumetric lighting").
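One way to apply these practices consistently is to fill in a structured "shot card" before writing any prompt, then flatten it into text. The `ShotDirection` class and its fields are a hypothetical convention, not a feature of any particular tool; tools with dedicated camera controls may expose some of these as settings instead of prompt text.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class ShotDirection:
    """Hypothetical shot card; field names are illustrative."""
    subject: str
    camera: str = ""       # e.g. "Dutch angle", "Dolly zoom"
    lens_effect: str = ""  # e.g. "Bokeh effect"
    lighting: str = ""     # e.g. "Golden hour", "Volumetric lighting"

    def to_prompt(self) -> str:
        # Subject first, then camera, lens and lighting cues; skip empty fields
        parts: List[str] = [self.subject, self.camera, self.lens_effect, self.lighting]
        return ", ".join(p for p in parts if p)

shot = ShotDirection(
    subject="a cat riding a bike down a cobblestone street",
    camera="Dolly zoom",
    lens_effect="Bokeh effect",
    lighting="Golden hour",
)
print(shot.to_prompt())
```

Filling in a fixed set of fields forces an explicit decision about camera, lens, and light for every shot, which is the difference between "a cat on a bike" and a directed scene.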

5. Specialized Micro-Utilities

While massive generation models grab headlines, there is a quiet trend toward specialized "micro-utilities": AI tools built to do one job very well.

Examples include AI upscalers that turn 1080p footage into 4K, frame interpolators that smooth out choppy motion, and dedicated watermark removers. These utilities are essential for the "last mile" of production, polishing raw AI generations into broadcast-ready files. Following the latest updates on platforms such as Runway (for example, Gen-3) helps creators see which micro-tools currently lead the market.
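To show what a frame interpolator fundamentally does, here is the crudest possible version: inserting linearly blended frames between each real pair. This is a simplified illustration, not how commercial interpolators work. Those estimate motion (optical flow or learned models) so moving objects shift position rather than cross-fade, while a plain blend like this produces ghosting. Frames are modeled as flat lists of grayscale values purely for brevity.

```python
from typing import List

Frame = List[float]  # simplified: one frame as a flat list of grayscale pixel values

def blend(a: Frame, b: Frame, t: float) -> Frame:
    """Linear blend between frames a and b at position t in [0, 1]."""
    if len(a) != len(b):
        raise ValueError("frames must have the same number of pixels")
    return [(1 - t) * pa + t * pb for pa, pb in zip(a, b)]

def interpolate(frames: List[Frame], factor: int) -> List[Frame]:
    """Insert factor-1 blended frames between each pair of input frames."""
    if factor < 1:
        raise ValueError("factor must be >= 1")
    if not frames:
        return []
    out: List[Frame] = []
    for a, b in zip(frames, frames[1:]):
        out.append(a)
        for i in range(1, factor):
            out.append(blend(a, b, i / factor))
    out.append(frames[-1])
    return out

smoothed = interpolate([[0.0], [10.0]], factor=2)
```

With `factor=2`, a clip of n frames becomes (n - 1) * 2 + 1 frames, roughly doubling the frame rate: the two one-pixel frames above become `[0.0]`, `[5.0]`, `[10.0]`.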

Conclusion

The AI video generation trends of 2025 are defined by maturity. We are moving away from chaotic experimentation toward controlled, consistent, and commercially viable workflows. The future belongs to creators who stop treating AI as a magic button and start treating it as a complex instrument. By leveraging multi-model hubs, mastering consistency, and adopting a "director's mindset," you can harness this technology to tell stories that were previously impossible.